Just what is lexomics?

The term “lexomics” was originally coined to describe the computer-assisted detection of “words” (short sequences of bases) in genomes. When applied to literature, as we do here, lexomics is the analysis of the frequency, distribution, and arrangement of words in large-scale patterns. More specifically, with the suite of tools we have built, we segment texts, count the number of times each word or character n-gram appears in each segment (or chunk), and then apply visualization and analysis tools (e.g., cluster analysis to show relationships between the chunks).
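The segment-and-count step described above can be sketched in a few lines of Python. This is a minimal illustration, not the code Lexos itself runs: the function names `segment` and `count_chunks`, the whitespace tokenizer, and the fixed chunk size are all assumptions made for the example.

```python
from collections import Counter


def segment(tokens, chunk_size):
    """Split a token list into consecutive chunks of chunk_size tokens.

    The final chunk may be shorter than chunk_size.
    """
    return [tokens[i:i + chunk_size] for i in range(0, len(tokens), chunk_size)]


def count_chunks(text, chunk_size):
    """Return one word-frequency Counter per chunk of the text."""
    tokens = text.lower().split()  # naive whitespace tokenizer (assumption)
    return [Counter(chunk) for chunk in segment(tokens, chunk_size)]


text = "the king spoke and the queen spoke and the court listened"
counts = count_chunks(text, chunk_size=4)
for number, chunk_counts in enumerate(counts, start=1):
    print(number, dict(chunk_counts))
```

Each `Counter` is one row of the kind of chunk-by-word table that visualization or cluster analysis would then take as input.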

Will lexomics replace me as a scholar?

Nope. Lexomics, like most computational text-mining tools, is what John Burrows calls a “middle game” technique. You (the scholar) have much scholarship to do before running computational tools (for example, collecting texts and forming hypotheses about where you might segment them). After using the tools, you still have much work to do to interpret the results and form new hypotheses. The computer is just a tool “in the middle”, albeit a very powerful one that today’s scholars of texts want in their arsenal.

Where do I start?

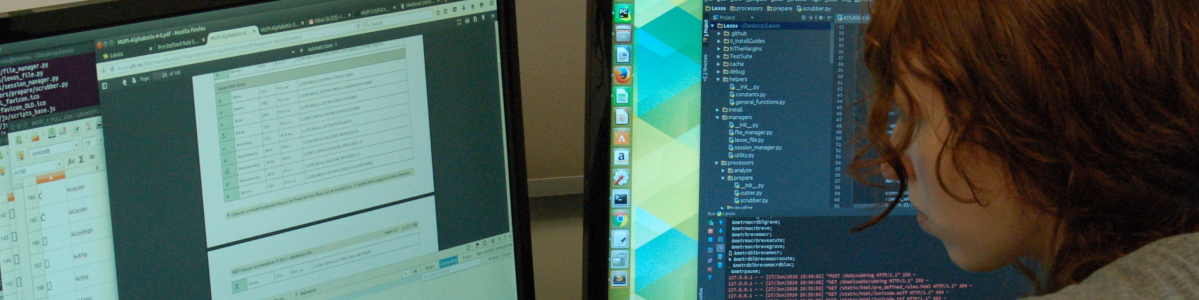

Try the deep dive! Go to Lexos (http://lexos.wheatoncollege.edu), upload a few simple raw text files, scrub the files (e.g., remove punctuation, convert to lowercase), select a token type (e.g., count individual words, bi-grams, etc.), and then explore your texts (e.g., plot the use of a word or phrase across the entire text in our Rolling Window tool, perform a cluster analysis to see which texts are most similar, and/or find the “top words” that are most distinctive in a text relative to the others).
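To get a feel for what scrubbing and a rolling window do before you upload anything, here is a rough Python sketch. It is not the Lexos implementation: `scrub` and `rolling_average` are hypothetical helper names, the scrubber removes only ASCII punctuation, and the window is measured in tokens.

```python
import string


def scrub(text):
    """Lowercase the text and remove ASCII punctuation (curly quotes etc. are left alone)."""
    return text.lower().translate(str.maketrans("", "", string.punctuation))


def rolling_average(tokens, word, window):
    """For each sliding window of `window` tokens, return the fraction equal to `word`."""
    return [
        tokens[i:i + window].count(word) / window
        for i in range(len(tokens) - window + 1)
    ]


raw = "The cat sat. The dog sat! A bird flew; the cat watched."
tokens = scrub(raw).split()
series = rolling_average(tokens, "the", window=4)
print(series)
```

Plotting `series` against the window position gives a simple picture of where a word is used heavily and where it drops away across a text.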

What browser should I use?

On macOS, Windows, or Linux, we recommend the Chrome browser; Firefox also works. Safari and Edge are not supported.

What languages (other than Old English, Latin, etc.) does lexomic analysis work with?

We have worked hard on the “scrubbing” functionality so that it handles texts in many file types (raw .txt, Unicode, .sgml, .html, .xml) and languages. Note, however, that our HTML and XML handling is not a full-fledged parser. We recommend that you save (encode) your files in Unicode (UTF-8).
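If a file was saved in an older encoding, you can re-save it as UTF-8 before uploading. The sketch below is one way to do that in Python; the helper name `to_utf8` is hypothetical, and the default source encoding (Latin-1) is only an assumption, so substitute whatever encoding your file actually uses.

```python
import tempfile
from pathlib import Path


def to_utf8(src, dst, source_encoding="latin-1"):
    """Read src using source_encoding and write the same text to dst as UTF-8."""
    text = Path(src).read_text(encoding=source_encoding)
    Path(dst).write_text(text, encoding="utf-8")


# Demonstration with a temporary Latin-1 file containing Old English characters.
with tempfile.TemporaryDirectory() as folder:
    original = Path(folder) / "sample_latin1.txt"
    converted = Path(folder) / "sample_utf8.txt"
    original.write_bytes("þæt wæs god cyning".encode("latin-1"))
    to_utf8(original, converted)
    print(converted.read_text(encoding="utf-8"))
```

If the source encoding is guessed wrongly, characters such as þ and æ will come out garbled, so check the converted file before running any analysis on it.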

Is lexomics analysis in general and are dendrograms in particular unique to your research group?

No. Scholars have been counting words, clustering, and classifying texts for years. Our contribution is to build tools that make it relatively easy for scholars to run experiments, such as cluster analysis with results visualized as dendrograms, Voronoi diagrams, or PCA plots for k-means. Those scholars include our own group and our undergraduate students!

Who wrote the software for the Lexomics Tools?

Undergraduate students at Wheaton College (Norton, MA) wrote almost all the software in Lexos. Professor Scott Kleinman at California State University, Northridge presently serves as software lead on the front-end. From 2015 to 2017, Cheng Zhang ’18 was our lead developer; Weiqi Feng ’19 was our lead in 2018 and 2019. Myles Trevino ’20 refactored our UI in 2020. See our About Us page to see our smiling faces and learn more about the community of student programmers over the years.

How do I cite use of the Lexomics tools?

The Lexomics tools are Open Source Software [MIT License]. For research use, please remember to cite Lexomics:

Kleinman, S., LeBlanc, M.D., Drout, M., and Zhang, C. (2019). Lexos v3.2.0. https://github.com/WheatonCS/Lexos/.

Have any unanswered questions?

Be sure to send us any additional questions at [mdrout at wheatoncollege dot edu].